Alexander Long

Applied Scientist at Amazon.

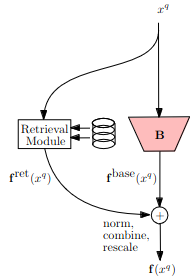

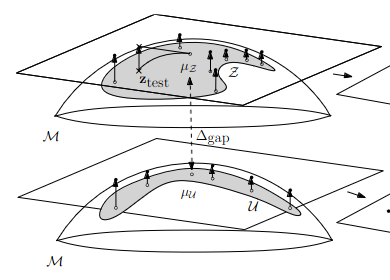

I work on a team of 14 deep learning PhDs, focusing on high-impact projects within the International Stores Org. We are responsible for science design and engineering, for taking models to production, and for publishing research in tier-1 venues. My research focuses on retrieval augmentation and sample-efficient adaptation of large multi-modal foundation models. Before joining Amazon, I completed my PhD on External Non-parametric Memory in Deep Learning at UNSW in 2021, and before that a Master's in Electrical Engineering at TUM, Germany, with a thesis on humanoid robotics.

The group is currently recruiting interns. Please reach out if you are located in Australia and have recent first-author papers in NeurIPS, ICML, ICLR, CVPR, ACL, or similar venues.

latest posts

| Jan 27, 2024 | a post with code diff |
|---|---|
| Jan 27, 2024 | a post with advanced image components |
| Jan 27, 2024 | a post with vega lite |